Articles

Go beyond 9s service level objectives, recovery time objectives and recovery point objectives. Improve your resilience decisions with failure impact narratives.

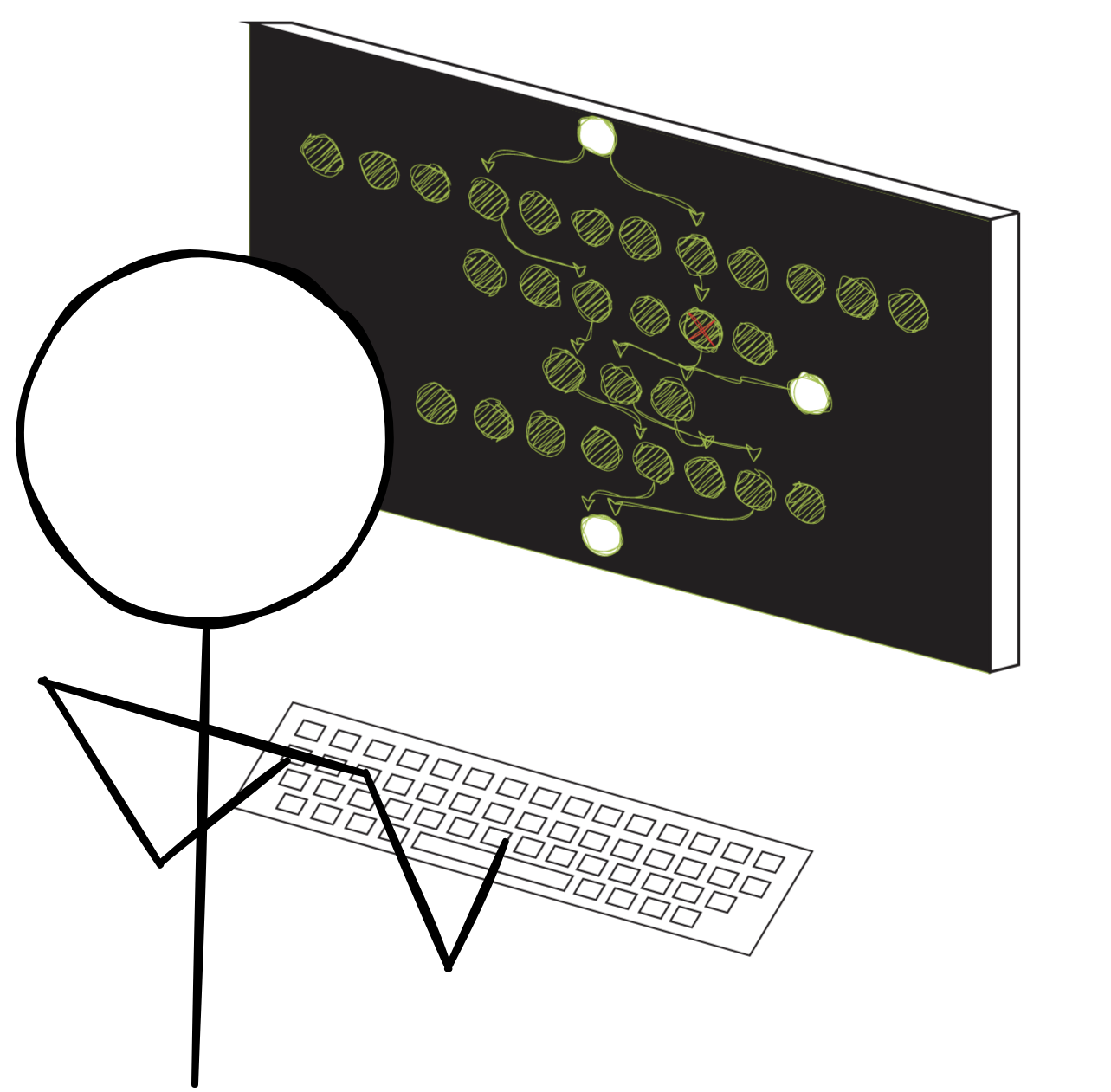

Cloud networks, and most massively scaled networks, are subject to partial failures that impact some of your application connections but not others. Congestion, which causes intermittent packet loss, is another flavor of partial impact that occurs in multi-tenant environments. How do you know if your monitoring, health checks and failure mitigations, like host removal and retry, will detect and mitigate these kinds of failures? You test! This article will show you how you can use Linux iptables, routing policies and network control to simulate cloud network failures.
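As a rough illustration of the idea, the sketch below builds (but does not run) two iptables commands: one that randomly drops a fraction of outbound packets to mimic congestion, and one that drops only TCP connections from a range of source ports so that some connections fail while others succeed. The function names, the destination address (a documentation-range IP) and the port range are assumptions made for this example, not the article's own method. Applying such rules requires root and should only be done on a test host.

```python
from typing import List


def packet_loss_rule(dest_ip: str, loss_probability: float) -> List[str]:
    """Build an iptables command that randomly drops a fraction of
    outbound packets to dest_ip (simulated intermittent packet loss)."""
    if not 0.0 < loss_probability <= 1.0:
        raise ValueError("loss_probability must be in (0, 1]")
    return [
        "iptables", "-A", "OUTPUT",
        "-d", dest_ip,
        "-m", "statistic", "--mode", "random",
        "--probability", f"{loss_probability:.4f}",
        "-j", "DROP",
    ]


def partial_connection_rule(dest_ip: str, sport_low: int, sport_high: int) -> List[str]:
    """Build an iptables command that drops outbound TCP packets only when
    the local source port is in [sport_low, sport_high], so only a subset
    of connections to dest_ip is affected (simulated partial failure)."""
    if not 0 < sport_low <= sport_high <= 65535:
        raise ValueError("invalid source port range")
    return [
        "iptables", "-A", "OUTPUT",
        "-p", "tcp",
        "-d", dest_ip,
        "--sport", f"{sport_low}:{sport_high}",
        "-j", "DROP",
    ]


def delete_form(rule: List[str]) -> List[str]:
    """Turn an append (-A) command into the matching delete (-D) command
    so the simulated failure can be removed after the test."""
    return rule[:1] + ["-D"] + rule[2:]


if __name__ == "__main__":
    # Hypothetical target: 203.0.113.10 is a documentation-only address.
    for rule in (
        packet_loss_rule("203.0.113.10", 0.10),
        partial_connection_rule("203.0.113.10", 32768, 40959),
    ):
        print("apply: ", " ".join(rule))
        print("remove:", " ".join(delete_form(rule)))
```

Printing the commands rather than executing them keeps the sketch safe to run anywhere; on a test host you could pass each list to `subprocess.run` and then watch whether your health checks and retries react.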

Massively scaled cloud networks are composed of thousands of network devices. Network failures (usually) show up in tricky ways that your monitoring won't detect. These failures (usually) lead to minor client impacts. However, some critical applications, like database clusters with strict data consistency, are sensitive to even these minor disruptions. This article will explain the reasons behind the odd behaviors you might have noticed in your applications running in the cloud or over the Internet. It also includes recommendations for making applications more resilient by detecting and mitigating these (usually) minor failures in the massive networks they depend on.

Failure categories are a simplified approach to understanding distributed systems failures and what to do about them. Without failure categories, failure mode analysis and system design tend to work from a list of thousands of specific failures, which leads to one-off approaches to failure detection and mitigation. Failure categories can help you design a few common approaches that detect and mitigate a wider range of failures.

Critical applications that need recovery times under 5 minutes for a wide range of failures need special monitoring. Many teams may not realize that their monitoring package could fail due to the same underlying issue that impacts their application. They may also not realize that their metrics cannot detect partial failures or alert on them. This article will explain trade-offs and design recommendations for monitoring critical applications.
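One way a metric can miss a partial failure is aggregation: a fleet-wide success rate can stay above its alert threshold while a single host is failing half of its requests. The sketch below illustrates this with made-up numbers; the host names, request counts and the 95% threshold are all hypothetical, and this is only one possible illustration of the problem, not the article's own analysis.

```python
from typing import Dict, List, Tuple

# Maps host name -> (successful requests, total requests).
HostCounts = Dict[str, Tuple[int, int]]


def aggregate_success_rate(per_host: HostCounts) -> float:
    """Fleet-wide success rate: all successes divided by all requests."""
    total_ok = sum(ok for ok, _ in per_host.values())
    total = sum(n for _, n in per_host.values())
    return total_ok / total


def unhealthy_hosts(per_host: HostCounts, threshold: float) -> List[str]:
    """Hosts whose individual success rate is below the threshold."""
    return sorted(
        host
        for host, (ok, n) in per_host.items()
        if n > 0 and ok / n < threshold
    )


if __name__ == "__main__":
    # Hypothetical fleet: 19 healthy hosts and one host failing 50% of requests.
    sample: HostCounts = {f"host-{i:02d}": (1000, 1000) for i in range(1, 20)}
    sample["host-20"] = (500, 1000)

    threshold = 0.95  # hypothetical alert threshold
    print("aggregate rate:", aggregate_success_rate(sample))
    print("aggregate alert fires:", aggregate_success_rate(sample) < threshold)
    print("per-host alerts:", unhealthy_hosts(sample, threshold))
```

In this made-up fleet the aggregate rate stays above the threshold, so an alert on the fleet-wide number would stay silent, while the per-host view flags the failing host.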

When code and config changes cause a failure, rollbacks are a slow and unreliable mitigation. The best protection against change-related failures isn't automated rollbacks; it's better fault isolation and redundant capacity.